Measuring OS&H performance

Measurement and management

RoSPA suggests that one reason why occupational safety & health receives less board level attention than other business priorities is the difficulty of measuring effectiveness in responding to what is a complex, multi-dimensional challenge.

In business 'what gets measured gets managed'. However, in the field of OS&H, it is far from clear whether 'key players' share a clear view of performance and its measurement.

Regulation 5 of the Management of Health and Safety at Work (MHSW) Regulations 1 contains a general requirement for organisations to monitor and review preventive and protective measures although the accompanying Approved Code of Practice (ACoP) to the Regulations 2 says little about appropriate approaches to monitoring - either actively or reactively. This is surprising given that Regulation 5 qualifies the duty to ".. put in place and give effect to suitable health and safety management arrangements.." by saying that regard must be taken of the nature of the employer's activities and the size of his undertaking.

Parts 5 and 6 of HSG65 3 give general advice on active monitoring and appendix 6 of HSG65 3 advises on the calculation and use of accident incidence and frequency to measure safety performance. Part 6 in particular suggests areas for the development of key performance indicators and refers to the value of 'benchmarking', on which there is now separate HSE guidance, Health and safety benchmarking – Improving together 4.

Annex E of BS 8800 5 provides a fuller explanation of approaches to monitoring, stressing the value of pro-active and reactive monitoring and the need to select an appropriate range of indicators. It differentiates between 'direct' indicators of performance (e.g. accident rates) and 'indirect' indicators (such as health and safety training, compliance with systems of work etc), stressing that time often needs to elapse before the latter can affect the former and that linkage between the two 'may not be perfect'.

It also states "Accident data should never be used as the sole measure of OS&H performance" and "...organisations should select a combination of indicators as OS&H performance measures".

Guidance is given on objective/subjective and quantitative/qualitative performance measures as well as on measurement techniques. These include, for example, specific inspections and workplace tours using checklists, environmental sampling, behaviour sampling, and attitude surveys. There is also a section on hazardous event or 'near-miss' investigation.

Problems in current practice

Many organisations still make no attempt at all to measure OS&H performance.

Among those that do, use of 'direct' indicators to assess 'safety output' (e.g. injury rates) has dominated to the exclusion of other indicators such as measures of 'safety climate' or various aspects of the safety management 'process'.

The measurement of OS&H management 'process' using audit systems leading to a score or ranking is now more common, particularly in larger, high hazard organisations. However, it is still not widely accepted as a measure for setting meaningful and measurable corporate targets for improvement. Instead, 'output' key performance indicators such as lost time injury rates tend to dominate, to the exclusion of other indicators such as:

- 'Near misses';

- Unsafe acts and conditions;

- Environmental indicators (measurement of airborne contaminants, noise, vibration etc); and

- Work related health damage.

Generally speaking, performance assessment tends to focus on safety and exclude health considerations.

Common terms used to express 'safety' performance include:

- Numbers of accidental injuries per year;

- Rates of accidents per 100,000 employed;

- Frequency of accidents per million person hours;

- Days lost due to injury per year;

- Severity rate (ratio of major to minor outcomes);

- Estimated accident costs.
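The rate-based terms in the list above can be illustrated with a short calculation. This is a minimal sketch only: the function names, the choice of reporting period and the example figures are hypothetical and are not taken from HSG65 or any other cited guidance. It assumes the usual convention of dividing the number of injuries by an exposure measure (people employed or hours worked) and scaling by the stated multiplier.

```python
def incidence_rate(injuries: int, average_employed: float, per: int = 100_000) -> float:
    """Injuries per `per` people employed over the reporting period."""
    if average_employed <= 0:
        raise ValueError("average_employed must be positive")
    return injuries * per / average_employed


def frequency_rate(injuries: int, hours_worked: float, per: int = 1_000_000) -> float:
    """Injuries per `per` person hours worked over the reporting period."""
    if hours_worked <= 0:
        raise ValueError("hours_worked must be positive")
    return injuries * per / hours_worked


def severity_ratio(major: int, minor: int) -> float:
    """Ratio of major to minor injury outcomes."""
    if minor <= 0:
        raise ValueError("minor must be positive")
    return major / minor


# Hypothetical site: 250 employees, 500,000 hours worked,
# 4 injuries in the year (1 major, 3 minor).
print(incidence_rate(4, 250))      # injuries per 100,000 employed
print(frequency_rate(4, 500_000))  # injuries per million person hours
print(severity_ratio(1, 3))        # major : minor
```

Expressing injuries relative to exposure allows sites of different sizes to be compared, but with only a handful of events the resulting rates can swing widely from year to year.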

Lost time injury rate

Many companies in the UK have adopted 'Target Zero' as a focus for health and safety performance and a motivator for staff. In such organisations, the length of time since their last 'lost time' or 'medically treated' work related injury is given special significance as the principal indicator of success or failure in health and safety management. While many argue that every accident can be prevented, in reality, especially in very large organisations, some level of error leading to harm is probably inevitable.

The obvious limitation of a single focus on lost time injury rates is that it shifts attention away from other unplanned events with the potential to cause injury, including 'near misses' and 'unsafe acts and conditions'. It also excludes injuries to persons not employed (e.g. the public). Furthermore, the exclusive use of lost time injury rates can be extremely limiting because it reveals nothing about whether the underlying management processes are appropriate or adequate. The real causes of prevention failure are invariably deeply rooted in the ineffective management of operations, which includes failure to control behaviour and change attitudes.

In its defence it is often argued that lost time injury rates are a simple measure that all workers can understand. Research suggests that there are predictable ratios or 'accident triangles' which describe the relationship between lost time injury rates and the incidence of events such as minor injuries and non-injury accidents 3. The use of such modelling needs to be approached with caution: the ratios involved do not apply to all scenarios. In fact HSG65 3 specifically cautions against this in relation to measuring effectiveness in managing major hazards. (For example, success in preventing slips, trips and falls does not automatically imply success in managing large toxic, flammable or explosive inventories!).

Also, whether or not an injury leads to lost time is affected by operational, economic and social pressures. Even in well managed businesses there are problems of under-reporting.

There are many problems associated with the interpretation of changes in accident or injury rates. Accidents are low frequency events which require sophisticated statistical analysis. Often this is not appreciated, leading to the mis-interpretation of changes in accident statistics, for example, when considering the incidence of accidents in small organisations. An informed observer would want to assess whether small increases in numbers of accidents are part of a more generalised pattern of health and safety management failure or whether they are within the limits of random variation.
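One simple way to explore whether a rise in accident numbers might lie within the limits of random variation is to treat the annual count as a Poisson variable. The sketch below is illustrative only: the Poisson model is an assumption introduced here (it presumes independent events and a constant underlying rate and exposure), and the function name and figures are hypothetical.

```python
import math


def poisson_upper_tail(observed: int, expected: float) -> float:
    """Probability of seeing `observed` or more events when the
    long-run average is `expected`, assuming a Poisson model."""
    if observed < 0 or expected < 0:
        raise ValueError("observed and expected must be non-negative")
    term = math.exp(-expected)  # P(X = 0)
    below = 0.0                 # accumulates P(X < observed)
    for i in range(observed):
        below += term
        term *= expected / (i + 1)  # P(X = i + 1) from P(X = i)
    return max(0.0, 1.0 - below)


# Hypothetical small firm: long-run average of 2 accidents a year,
# 5 recorded this year.
p = poisson_upper_tail(5, 2.0)
print(round(p, 3))  # 0.053
```

On these hypothetical figures, five or more accidents would be expected in roughly one year in twenty by chance alone, so the increase on its own is weak evidence of deteriorating management. It would need to be read alongside other indicators.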

A further criticism that can be levelled at the use of lost time injury rates as a single performance measure is that it ignores issues such as work related ill health and unsafe conditions such as unacceptable exposures to health hazards. Health damage is generally a bigger issue than accidental injury but health performance indicators are harder to identify and quantify. HSE's estimate of over 12,000 deaths each year caused by past exposure to hazardous working conditions 6 is at least one (if not perhaps two) orders of magnitude greater than the number of deaths due to workplace accidents (although much of this occurs after those affected have ceased employment).

Some may seek to argue that good health and safety management which produces a low lost time injury rate is more likely to address health protection as well. But an absence of accidents cannot be taken to imply a low rate of work related ill health since neither modelling nor data are available to support this. Many organisations regard their sickness absence rates, in part at least, as an indicator of OS&H performance. However, most sickness absence is due to non-work related ill health.

Auditing health and safety management systems

'Direct' output performance measures such as lost time injury rates are often seen as the most valid indicators of success and failure in accident prevention ('the proof of the management system pudding'). Yet in many ways monitoring 'indirect' OS&H 'process' indicators is fundamentally more important. Output indicators focus on how much an organisation has 'got wrong'. Such measures of relative failure need to be set against what an organisation has 'got right'. This can be achieved by measuring the integrity and performance of health and safety management systems. The results of periodic and well structured OS&H management systems auditing can provide a more valid measure of performance over time than use of a reactive measure such as lost time injury rates (although fundamental research is still needed to assess the extent of any relationship between audit scores and such performance data).

There are many approaches to auditing, as seen by the range of proprietary systems available. There is also confusion about what constitutes auditing, with many tending to conflate the apparently similar (although in HSG65 3 terms, distinct) concepts of 'monitoring', 'auditing' and 'review'. In part this is because the auditor, like the manager measuring performance, does indeed collect evidence through observation, interview and tracking procedures, and does review performance against targets. What is often not so clear is that OS&H auditing, like financial auditing, constitutes an external, independent, sampling check on existing systems which themselves embody measurement, checking and periodic review.

Some auditing systems, like RoSPA's health and safety systems auditing package, 'Quality Safety Audit' (QSA), are based directly on HSG65 3 and involve use of question sets which probe the integrity and performance of management systems horizontally, while including some vertical 'verifiers'. Other systems take the form of vertical compliance audits based around certain activities or pieces of legislation.

Whatever approach is adopted, a key principle should be that health and safety management auditing should not just check on the effective elimination or control of risks by specific preventive measures but should assess the completeness and operation of key elements in the health and safety management system. This means gathering evidence from documents, from observation and from interviews to assess the adequacy and implementation of elements such as policy, organisation, planning and implementation, monitoring and review - and ensuring that, in practice, they operate as a system which 'locks on' to potential problems and deals with them before harm occurs.

Measuring in this way enables the duty holder and other players to assess the strengths and weaknesses in existing management arrangements, including gaps between 'theory espoused' and 'theory practised' and to assess differences in management performance between (and within) undertakings and over time.

HSG65 3 contains useful advice on the case for auditing and the issues to be considered in selecting and/or preparing for audit. Further guidance could be useful, for example, at a sector level, to help businesses develop their overall approach. At present auditing is used mainly as an internal management technique, providing diagnosis of areas for improvement. But its outputs can also be used more widely in furnishing evidence of health and safety management capability and performance to others - for example, to clients, business partners, investors, insurers, shareholders, workforce representatives and enforcing authorities. It is also used as a basis for certification (see below).

Involvement in carrying out audits can also increase understanding of health and safety management, for example, when managers and workforce representatives are jointly involved in evidence gathering and analysis and when the results of audits are considered by joint health and safety committees.

With the exception of some high hazard industries, there is currently no legal requirement for organisations to audit health and safety management systems. Auditing is used by HSE inspectors, both in enforcement and in developing initiatives within companies and sectors. Although there may be an implication within Regulation 5 of the MHSW Regulations 1 that companies should undertake audit, there is a strong case for making this an explicit requirement.

RoSPA is arguing for a clearer interpretation of regulatory requirements for auditing, moving beyond the explicit auditing requirements of 'safety case' regulations and providing authoritative advice on this in the MHSW ACoP 2. Alternatively HSE could be given the power to require independent auditing, for example, in high risk organisations or activities or following convictions for health and safety offences.

There remains a major challenge in developing approaches to audit which are appropriate for small organisations. It could be argued that the need of the small firm to assess safety input or 'process' can be adequately met by simple periodic inspection or review. On the other hand, there may be value in their involving auditors to provide an external view. This may be particularly important where risks are substantial or safety depends on rigorous adherence to set procedures.

A health and safety management standard?

OHSAS 18001 (launched in 1999) is a health and safety management system 'standard', certification to which is also based on auditing.

However there are concerns, including:

- The fact that a 'standard' is unnecessary given the authoritative guidance already available in HSG65 3;

- The level of competence required of auditors - a critical issue - is not specified;

- There is the danger of too great a reliance on scrutiny of documents rather than evidence gathered by interview and observation;

- Given this bias, 'certification' to such a 'standard' cannot of itself attest to high or improving standards of overall performance, only basic standards of administrative consistency;

- 'Certification' is likely to be pushed inappropriately to clients by certifying bodies (many with little previous involvement in OS&H), leading possibly to additional costs, bureaucracy and little real added value;

- When required by clients in the contracting context, this in turn may violate principles of 'good regulation' and thus may serve only to damage perceptions of health and safety in general; and

- The significance of basic 'certification' on these lines is likely to be oversold by both 'certificating bodies' and the 'certificated'.

It seems clear to RoSPA that companies will need to be encouraged to prove to themselves and others that they:

- Have the essential elements of a health and safety management system in place;

- Are on a path of continuous improvement;

- Are measuring progress against plans and targets; and

- Are learning from their health and safety experiences.

Organisations need to consider carefully how they can best furnish evidence to key audiences of their capability to manage health and safety. Small firms, for whom requirements for extensive documentary evidence will not be appropriate, need to be encouraged to develop approaches which are proportionate to their circumstances (for example, reporting against a simple health and safety action plan).

RoSPA takes the view that developing consensus about health and safety management system standards and auditing (and health and safety performance measurement generally) is going to be vital.

Measuring OS&H 'culture'

While the management 'systems' view of OS&H focuses heavily on the formal features of proactive health and safety management, there is an increasing understanding that the effectiveness of systems depends in practice on the creation and maintenance of a robust health and safety 'culture' at the workplace. BS 8800 5, for example, stresses that the success of formal health and safety management arrangements depends heavily on 'culture and politics' within organisations and that OS&H 'culture' is a subset of an organisation's overall 'culture'.

Although the concept of 'OS&H culture' may lack some degree of intellectual rigour, it can be defined as a shared understanding within an organisation of the significance of health and safety problems and the appropriateness of measures needed to tackle them. HSG65 3 also talks of culture in the context of 'control', 'co-operation', 'communication' and 'competence'.

The HSL has developed the HSL Safety Climate Tool (SCT) to enable organisations to gain insight into their 'safety culture' and take the next steps to improve it. The results are designed to help identify strengths and weaknesses. These can then be compared, for example, with results from more formal audit processes. The results can be challenging for senior managers, and organisations using the tool need to be prepared to embrace its findings in a positive way.

An holistic approach?

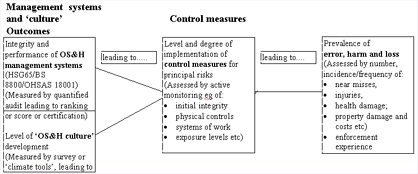

It should be clear from the above discussion that OS&H performance is multidimensional. No single measure provides an overriding indication of an organisation's success or failure in managing work related risk. A more holistic approach is required based on the assembly of an 'evidence package' composed of 'leading indicators' (such as measures of 'culture' and measures of the integrity and performance of management systems) linked to 'lagging indicators' such as control standards (and their implementation) for principal risks. These in turn can be related to further 'lagging, output indicators' such as levels of error, harm and loss (see figure 1).

In practice measuring all these parameters effectively poses a number of challenges. Some can be measured by continuous whole population monitoring, some only by using sampling techniques. There are likely to be many factors affecting the practicability and efficacy of measurement in each case. And there is always the danger that false significance will be attributed to that which can be measured because what is truly significant still defies measurement. Care has to be taken when seeking to interpret the significance of trends in each area and when seeking to relate them to one another.

Nevertheless, organisations which are currently assessing corporate performance using single measures, such as accident rates, should be encouraged to adopt a more holistic approach, using an appropriate selection of indicators. While integration of such measures into a single performance measure is unlikely to be possible (or indeed meaningful), read together, they can still provide powerful data to evaluate progress within and between organisations and over time.

Targets?

In practice the term 'target' tends to be used quite loosely alongside the related (although arguably distinct) concepts of 'mission', 'vision', 'aspiration', 'aim', 'goal' and 'objective'. Target setting, if it is to be useful however, has to be based on good data, robust analysis and a sound understanding of the processes through which improved risk management can be achieved. At a corporate or divisional level it needs to be based on a sound understanding of the causes and 'preventability' of each accident and instance of work related ill health. There is also the need to establish sound 'baselines'.

The reasoning underpinning targets and how they were arrived at needs to be more widely shared so that all those involved in target setting, whether across a whole company or sector or at a departmental level within a business, can compare their approach with that adopted by others. When setting 'output' performance targets therefore, besides focusing on reductions in lost time or medically treated injuries, organisations also need to look at indicators such as:

- 'Near miss' rates;

- Reductions in exposure to harmful agents in the work environment (e.g. airborne contaminants, noise, radiation etc); and

- Reductions in exposure to harmful burdens (physical, psychological).

More importantly perhaps, they also need to be able to identify meaningful OS&H management 'process' targets.

If targets appear to be 'plucked from thin air', not only will they lack transparency, meaning and credibility but they will not secure workforce and management 'buy-in'. As with good budgeting, targets will not be robust unless they are based on a rigorous 'ground up' approach in which, at each stage, the key stakeholders are subject to challenge on their estimates.

Members of the workforce, as keepers of the knowledge about working conditions and possibilities for change, should be consulted by employers as required by legislation via appropriate structures (e.g. safety committees). They should also be given opportunities to initiate debate themselves and to compare notes with colleagues within and across their sectors or with others outside. Targets which merely assert unrealistic goals without indicating the means by which they can be achieved serve no useful purpose and need to be challenged.

If it turns out that the wrong targets have been selected, then they need to be changed or adjusted, particularly if duty holders merely seek to deliver against them rather than the real, underlying objectives (for example, target driven managers asking themselves, 'did the accident really qualify as "lost time"?').

References

1. Management of Health and Safety at Work (MHSW) Regulation 5

2. Management of Health and Safety at Work Regulations 1999 Approved Code of Practice and guidance

3. HSG65 - Successful health and safety management

4. Health and safety benchmarking. Improving together

5. BS 8800:2004 Occupational health and safety management systems

6. HSE Statistics